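I have mostly relied on virtual machines for virtualization, but I wanted to try containers as well, so I am going to install Kubernetes.

The environment is a virtual machine on ESXi 6.7 with a Minimal install of CentOS Stream 8.

The spec is 4 CPU cores, 4 GB of memory, and a 100 GB disk.

Swap has to be disabled, so stop the swap volume from being mounted. After changing the setting, confirm the result with a command such as free.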

# vi /etc/fstab

/dev/mapper/cs_container01-swap none swap defaults 0 0

↓

#/dev/mapper/cs_container01-swap none swap defaults 0 0

# free

total used free shared buff/cache available

Mem: 3820688 249896 3115128 8812 455664 3335584

Swap: 8388604 0 8388604

# reboot

# free

total used free shared buff/cache available

Mem: 3820688 229780 3336676 8812 254232 3356220

Swap: 0 0 0
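The fstab edit above can also be scripted. The following is a minimal sketch, not the procedure used in this article: the function name comment_out_swap is hypothetical, and it takes the file path as an argument so it can be tried on a copy before touching /etc/fstab.

```shell
# Comment out every active swap entry in an fstab-style file.
# comment_out_swap is a hypothetical helper name, not part of any tool.
comment_out_swap() (
    fstab="$1"
    tmp="$(mktemp)"
    # Prefix '#' to non-comment lines whose third field is "swap".
    sed -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
        "$fstab" > "$tmp" && cat "$tmp" > "$fstab"
    rm -f "$tmp"
)

# Example (as root):
#   cp /etc/fstab /etc/fstab.bak
#   comment_out_swap /etc/fstab
#   swapoff -a    # turns swap off on the running system without a reboot
```

Writing back with cat instead of mv keeps the original file's owner and permissions.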

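So that the hosts in this environment can resolve each other's names, register them in the simple DNS feature on the RTX side.

Using /etc/hosts works too.

# ip host container01 192.168.1.151

# ip host container02 192.168.1.152

# ip host container03 192.168.1.153

# save

Saving... CONFIG0 Done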
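For the /etc/hosts alternative, a sketch along these lines could add the same three names on each node. The function name add_k8s_hosts is hypothetical; the addresses and host names are the ones used in this article.

```shell
# Append the three node entries to a hosts-style file,
# skipping any host name that is already present.
add_k8s_hosts() (
    hosts_file="$1"
    for entry in "192.168.1.151 container01" \
                 "192.168.1.152 container02" \
                 "192.168.1.153 container03"; do
        ip="${entry%% *}"      # text before the space
        name="${entry##* }"    # text after the space
        # -w matches the name as a whole word, so container01 does not match container010.
        if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
)

# Example (as root, on every node):
#   add_k8s_hosts /etc/hosts
```

Because existing names are skipped, running it twice does not duplicate entries.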

Disable firewalld and switch SELinux to permissive mode.

# systemctl disable firewalld

# systemctl stop firewalld

# vi /etc/selinux/config

SELINUX=enforcing

↓

SELINUX=permissive
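The SELinux change is a one-line substitution, so it can be done without opening an editor. This is only a sketch: set_selinux_permissive is a hypothetical name, and the path is passed in so the function can be tested on a copy of the config file.

```shell
# Rewrite "SELINUX=enforcing" to "SELINUX=permissive" in an SELinux config file.
# Other lines, such as SELINUXTYPE=targeted, are left untouched.
set_selinux_permissive() (
    config="$1"
    tmp="$(mktemp)"
    sed -E 's/^SELINUX=enforcing[[:space:]]*$/SELINUX=permissive/' "$config" > "$tmp" \
        && cat "$tmp" > "$config"
    rm -f "$tmp"
)

# Example (as root):
#   set_selinux_permissive /etc/selinux/config
#   setenforce 0    # switches the running system to permissive until the next boot
```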

Configure the iptables bridge settings and IP forwarding. Once you reboot to apply the settings, the OS-side preparation is complete.

# vi /etc/sysctl.d/k8s.conf

↓ Create the file and add the following

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

# vi /etc/sysctl.conf

↓ Append at the end

net.ipv4.ip_forward = 1

# reboot
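The same kernel settings can be written from a script. The sketch below is an assumption-laden variant rather than the exact steps above: it puts all three keys into a single file (the article puts net.ipv4.ip_forward in /etc/sysctl.conf), and write_k8s_sysctl is a hypothetical name.

```shell
# Write the three kernel parameters used in this article to one sysctl file.
write_k8s_sysctl() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Example (as root):
#   modprobe br_netfilter            # the net.bridge.* keys only exist while this module is loaded
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf
#   sysctl --system                  # reloads all sysctl files without a reboot
```

Keeping everything in one file under /etc/sysctl.d makes it easy to see, and later remove, what was changed for Kubernetes.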

Install Docker.

# dnf config-manager --add-repo=https://download.docker.com/linux/centos/docker-ce.repo

# dnf install docker-ce

# dnf install docker-ce-cli

Configure the Docker daemon and enable it.

# mkdir /etc/docker

# vi /etc/docker/daemon.json

↓ Create the file and add the following

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

# mkdir -p /etc/systemd/system/docker.service.d

# systemctl daemon-reload

# systemctl enable docker

# systemctl start docker

# systemctl status docker

↓

If it shows Active: active (running), it is OK.
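The exec-opts line in daemon.json matters because Docker and the kubelet are expected to use the same cgroup driver. As a rough check, the sketch below reads the configured driver back out of the file. The function name configured_cgroup_driver is hypothetical, and it only inspects the file, not the running daemon.

```shell
# Print the cgroup driver named in a Docker daemon.json, or nothing if none is set.
configured_cgroup_driver() {
    sed -n 's/.*native\.cgroupdriver=\([a-z]*\).*/\1/p' "$1"
}

# Example:
#   configured_cgroup_driver /etc/docker/daemon.json
#   docker info --format '{{.CgroupDriver}}'    # what the running daemon actually uses
```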

Verify that a container can start.

If Hello from Docker! is printed, it is working.

# docker run hello-world

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

Next, install Kubernetes. First, add the repository.

# cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

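Install the packages, passing --disableexcludes=kubernetes so that the exclude line in the repository added above does not block them.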

# dnf install kubelet kubeadm kubectl --disableexcludes=kubernetes

Once the installation finishes, enable kubelet at boot and start it.

# systemctl enable kubelet

# systemctl start kubelet

Run kubeadm init with the 192.168.1.151 node as the master. For the pod network, I use 10.12.x.x so that it stays clear of the host network. (12 comes from the C in Container.)

# kubeadm init --apiserver-advertise-address 192.168.1.151 --pod-network-cidr 10.12.0.0/16
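Whether a pod CIDR really stays clear of the host network can be checked with a little integer arithmetic. The sketch below is illustrative only: ip_to_int and cidrs_overlap are hypothetical helper names, and 192.168.1.0/24 for the host network is an assumption, since the article gives the node addresses but not the prefix length.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
    # Runs in a subshell, so the caller's IFS and arguments are untouched.
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Return 0 when two IPv4 CIDR blocks overlap, 1 otherwise.
cidrs_overlap() (
    net1=${1%/*}; len1=${1#*/}
    net2=${2%/*}; len2=${2#*/}
    # Two blocks overlap exactly when they agree on the shorter of the two prefixes.
    len=$len1
    if [ "$len2" -lt "$len" ]; then len=$len2; fi
    # Netmask for that prefix length (4294967295 is 0xFFFFFFFF).
    mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
    [ $(( $(ip_to_int "$net1") & mask )) -eq $(( $(ip_to_int "$net2") & mask )) ]
)

# Example:
#   if cidrs_overlap 10.12.0.0/16 192.168.1.0/24; then echo "overlap"; else echo "ok"; fi
```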

The join command with its token, printed at the end of the kubeadm init output, is needed later to add the other nodes.

kubeadm join 192.168.1.151:6443 --token yvwfkl.t0x1kv7hnv7p3v1l \

--discovery-token-ca-cert-hash sha256:b06d2cf00dedc02767ab32ba4879b7f208c3d843c346b4195eb3dd27f35eec95
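Since the join command is easy to lose in the long kubeadm init output, one option is to save that output to a file and pull the command back out later. The sketch below assumes the output was saved by hand (for example with tee); the function name extract_join_command and the file name are hypothetical.

```shell
# Print the "kubeadm join" command from saved kubeadm init output as a single line.
extract_join_command() {
    awk '
        /^kubeadm join/ { grab = 2 }          # the command spans this line and the next
        grab > 0 {
            sub(/\\$/, "")                    # drop the trailing line-continuation backslash
            gsub(/^[ \t]+|[ \t]+$/, "")       # trim surrounding whitespace
            printf "%s%s", sep, $0
            sep = " "
            grab--
        }
        END { print "" }
    ' "$1"
}

# Example:
#   kubeadm init ... | tee kubeadm-init.log
#   extract_join_command kubeadm-init.log
```

If the output is gone or the token has expired, running kubeadm token create --print-join-command on the master should print a fresh join command.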

On 192.168.1.152 and 192.168.1.153, run the join command with that token to add them to the cluster.

# kubeadm join 192.168.1.151:6443 --token yvwfkl.t0x1kv7hnv7p3v1l \

--discovery-token-ca-cert-hash sha256:b06d2cf00dedc02767ab32ba4879b7f208c3d843c346b4195eb3dd27f35eec95

Confirm that the nodes were added. They are NotReady, but the cluster does recognize them.

# kubectl get node

NAME STATUS ROLES AGE VERSION

container01 NotReady control-plane,master 6m53s v1.23.3

container02 NotReady <none> 2m2s v1.23.3

container03 NotReady <none> 95s v1.23.3

Create the pod network (Weave Net is used here).

# kubectl apply -n kube-system -f "https://cloud.weave.works/k8s/v1.23.3/net"

After that, the nodes should become Ready.

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

container01 Ready control-plane,master 14m v1.23.3

container02 Ready <none> 9m37s v1.23.3

container03 Ready <none> 9m10s v1.23.3
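Instead of reading the table by eye, the STATUS column can be filtered. This is a small sketch with a hypothetical function name, not_ready_nodes, that reads kubectl get nodes output on standard input.

```shell
# Print the NAME of every node whose STATUS column is not exactly "Ready".
# Expects the default "kubectl get nodes" table on stdin.
not_ready_nodes() {
    # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example:
#   kubectl get nodes | not_ready_nodes
```

An empty result means every node reports Ready. Note that a combined status such as Ready,SchedulingDisabled is also listed by this check, which may or may not be what you want.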

That alone is a bit bare, so set up the dashboard so the cluster can also be viewed from a GUI.

# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.109.17.255 <none> 8000/TCP 18s

kubernetes-dashboard ClusterIP 10.111.74.154 <none> 443/TCP 18s

As it stands, the dashboard cannot be reached from outside the cluster, so change the service settings.

# kubectl -n kubernetes-dashboard edit service kubernetes-dashboard

  ports:

  - port: 443

    protocol: TCP

    targetPort: 8443

    ↓ Add this line

    nodePort: 30172

  type: ClusterIP

  ↓ Change to

  type: NodePort

The added port 30172 now shows up.

# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.109.17.255 <none> 8000/TCP 21m

kubernetes-dashboard NodePort 10.111.74.154 <none> 443:30172/TCP 21m
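The same change could probably be applied non-interactively with kubectl patch instead of kubectl edit. The sketch below only builds the patch document. The function name nodeport_patch is hypothetical, the 30000-32767 range is the Kubernetes default and may differ on a customized cluster, and whether the patch merges cleanly into the existing port entry is an assumption I have not verified on this cluster.

```shell
# Print a JSON patch body that turns the dashboard service into a NodePort
# service on the given port. Fails if the port is outside the default range.
nodeport_patch() {
    if [ "$1" -lt 30000 ] || [ "$1" -gt 32767 ]; then
        echo "nodePort $1 is outside the default 30000-32767 range" >&2
        return 1
    fi
    printf '{"spec":{"type":"NodePort","ports":[{"port":443,"nodePort":%s}]}}\n' "$1"
}

# Example:
#   kubectl -n kubernetes-dashboard patch service kubernetes-dashboard \
#       -p "$(nodeport_patch 30172)"
```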

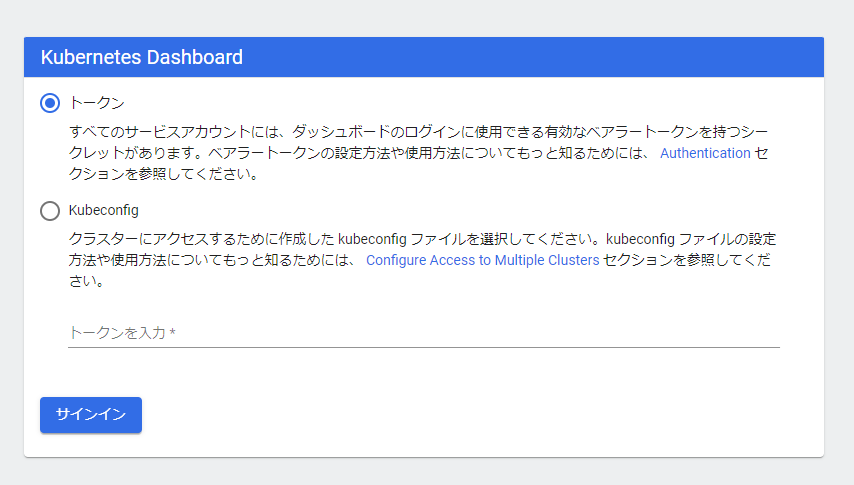

With that, the dashboard should be reachable at https://192.168.1.151:30172/.

However, it asks for a token at login, so create one with the following commands.

# vi dashboard-admin.yml

↓ Create the file and add the following

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kubernetes-dashboard

# kubectl create -f dashboard-admin.yml

# vi dashboard-admin_role.yml

↓ Create the file and add the following

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin-user

  namespace: kubernetes-dashboard

# kubectl create -f dashboard-admin_role.yml

# kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

Log in with the token returned by the last command and the dashboard becomes visible.

With that, the environment should be in working order for now.
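The go-template in the token command uses base64decode because values under .data in a Secret are stored base64-encoded. If you fetch the raw value some other way, a sketch like the following decodes it. The function name decode_token is hypothetical.

```shell
# Decode a base64-encoded value, such as the .data.token field of a Secret.
decode_token() {
    printf '%s' "$1" | base64 --decode
}

# Example:
#   raw=$(kubectl -n kubernetes-dashboard get secret <secret-name> -o jsonpath='{.data.token}')
#   decode_token "$raw"
```

This relies on the service account having a token Secret created for it automatically, as on the v1.23.3 cluster here. On Kubernetes 1.24 and later that Secret is no longer generated by default, and kubectl -n kubernetes-dashboard create token admin-user is the usual replacement.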